A Brief Introduction to the DECICE Framework Architecture

DECICE aims to develop an AI-based, open, and portable cloud management framework for the automatic and adaptive optimization and deployment of applications in a federated infrastructure, spanning computing resources from the very large (e.g., HPC systems) to the very small (e.g., IoT sensors at the edge).

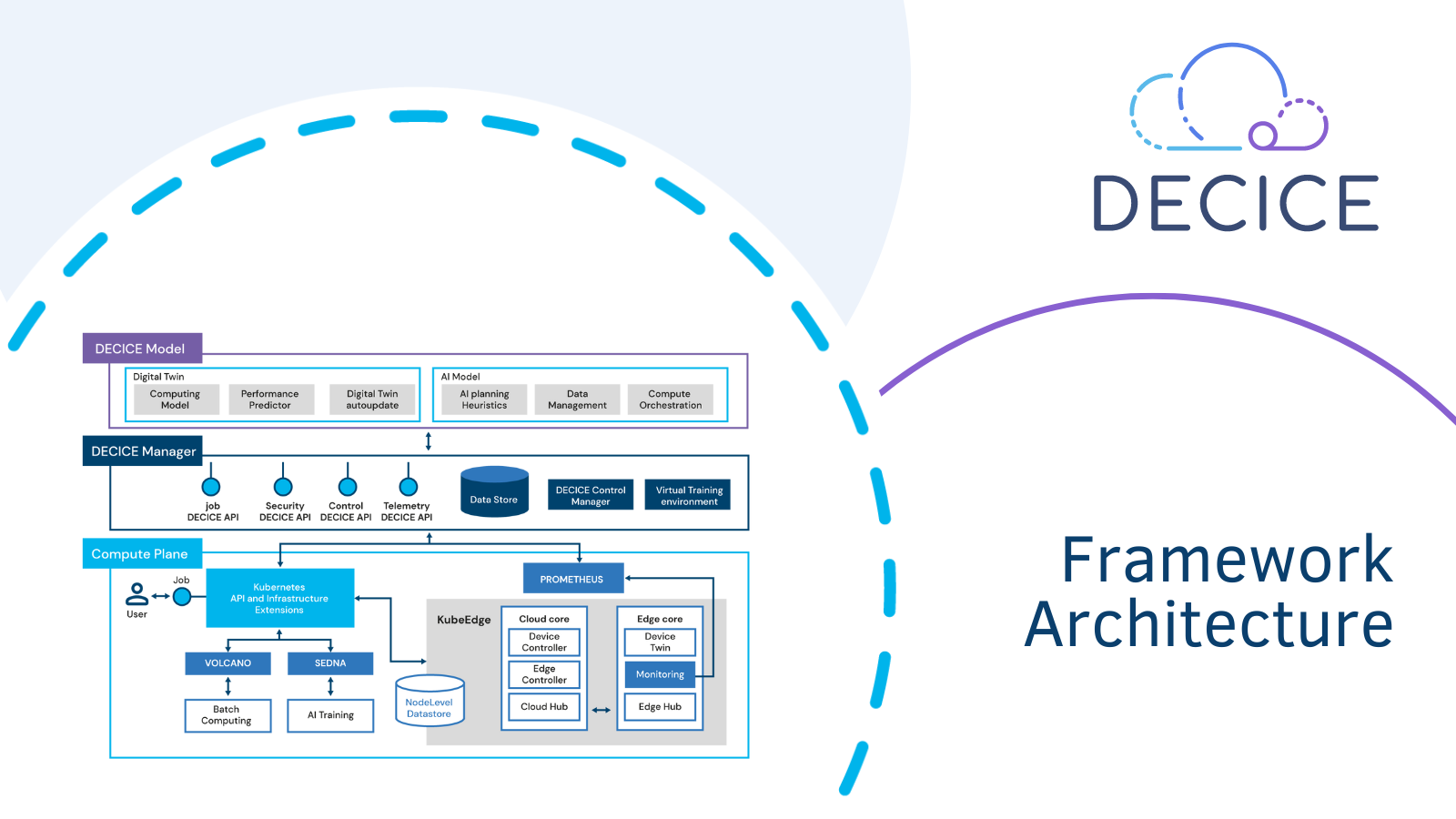

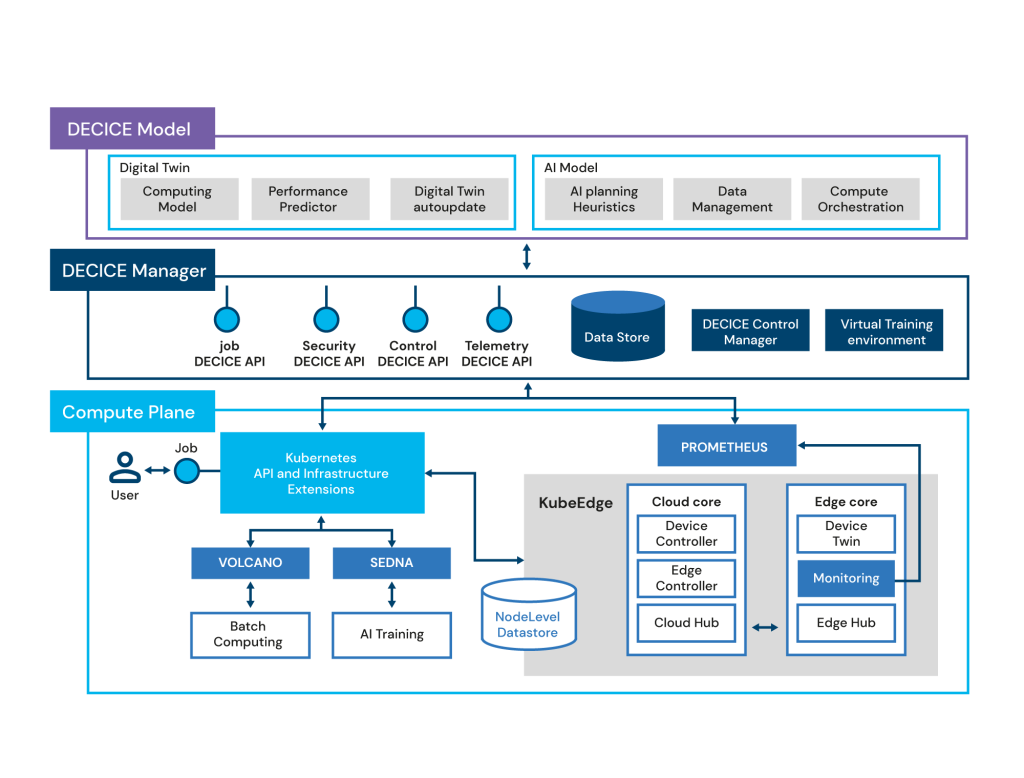

Internally, DECICE is structured into three major planes as shown below:

- Compute Plane: responsible for running user workloads, gathering telemetry, and training AI models.

- DECICE Manager: responsible for storing data, controlling application placement and movement, and controlling AI model training.

- DECICE Model: responsible for maintaining digital twins of the hardware in the control plane and making scheduling decisions about resource allocation for applications.
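
The interplay of the three parts can be illustrated with a minimal Python sketch of a single scheduling pass: the compute plane reports telemetry, the Manager stores it and asks the Model for a decision, and the Manager then applies that decision. All class and method names (`ComputePlane`, `Model`, `Manager`, `schedule`) are hypothetical, and the least-loaded-node heuristic merely stands in for DECICE's digital twin and AI-based scheduling.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Telemetry:
    node: str
    cpu_util: float  # fraction of CPU in use, 0.0 to 1.0


@dataclass
class ComputePlane:
    """Hypothetical stand-in for the Kubernetes/KubeEdge cluster."""
    cpu: dict[str, float] = field(default_factory=dict)       # node -> utilisation
    placements: dict[str, str] = field(default_factory=dict)  # app -> node

    def collect_telemetry(self) -> list[Telemetry]:
        # In DECICE this role is played by Prometheus; here we just read a dict.
        return [Telemetry(node, util) for node, util in self.cpu.items()]

    def place(self, app: str, node: str) -> None:
        self.placements[app] = node


class Model:
    """Hypothetical stand-in for the DECICE Model: a trivial least-loaded
    heuristic replaces the digital twin and AI-based decision making."""

    def decide(self, app: str, telemetry: list[Telemetry]) -> str:
        return min(telemetry, key=lambda t: t.cpu_util).node


class Manager:
    """Hypothetical stand-in for the DECICE Manager."""

    def __init__(self, compute: ComputePlane, model: Model) -> None:
        self.compute = compute
        self.model = model
        self.repository: list[Telemetry] = []  # stored monitoring data

    def schedule(self, app: str) -> str:
        telemetry = self.compute.collect_telemetry()  # compute plane -> manager
        self.repository.extend(telemetry)             # persist for twin updates / training
        node = self.model.decide(app, telemetry)      # manager -> model
        self.compute.place(app, node)                 # manager -> compute plane
        return node


if __name__ == "__main__":
    cluster = ComputePlane(cpu={"hpc-node-1": 0.9, "edge-1": 0.2})
    manager = Manager(cluster, Model())
    print(manager.schedule("sensor-analytics"))  # prints "edge-1"
```

Keeping the Model behind a single `decide` call means the heuristic can later be replaced by a twin-backed or learned policy without changing the Manager.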

DECICE Model

Dynamic Digital Twin: A Digital Twin is a virtual representation of an actual or potential system at both the micro and macro level. It models the complete lifecycle of the system using simulation, real-time monitoring, and forecasting, and the resulting model is then used to support decisions. Creating a Digital Twin of the heterogeneous cloud-edge infrastructure and of the deployed applications is one of the main goals of the project. The DECICE twin models the compute, memory, storage, and network resources as well as the application tasks; simulation and real-time monitoring are used to keep the model continuously up to date. AI models are then used to forecast the system's behaviour in the case of a new deployment or a failure. AI modeling is also used to continuously predict application performance based on the current state of the system and to suggest optimisations or adaptations that increase performance, energy efficiency, reliability, and throughput. These suggestions are then acted upon by the DECICE Manager.
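
The update-and-forecast cycle of a twin can be sketched in a few lines of Python. This is an illustration under stated assumptions, not DECICE's implementation: it tracks only CPU, uses simple exponential smoothing in place of the project's AI models, and the names `NodeTwin`, `what_if_deploy`, and `suggest_node`, as well as the 80% utilisation limit, are hypothetical. The `failed` flag shows how a failure scenario can be explored on the twin before it happens on the real system.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NodeTwin:
    """Hypothetical digital twin of one node's CPU resources."""
    name: str
    cpu_capacity: float        # cores available on the node
    alpha: float = 0.5         # smoothing factor (assumed value)
    cpu_forecast: float = 0.0  # forecast of cores in use
    failed: bool = False       # what-if flag for failure scenarios

    def update(self, observed_cpu: float) -> None:
        """Fold one real-time monitoring sample into the twin's forecast
        (exponential smoothing stands in for an AI forecaster)."""
        self.cpu_forecast = self.alpha * observed_cpu + (1 - self.alpha) * self.cpu_forecast

    def what_if_deploy(self, app_cpu: float) -> float:
        """Predicted utilisation (fraction of capacity) if the app were placed here."""
        return (self.cpu_forecast + app_cpu) / self.cpu_capacity


def suggest_node(twins: list[NodeTwin], app_cpu: float, limit: float = 0.8) -> str | None:
    """Suggest the healthy node with the lowest predicted utilisation that stays
    under `limit`; None means no safe placement exists."""
    candidates = [t for t in twins if not t.failed and t.what_if_deploy(app_cpu) <= limit]
    if not candidates:
        return None
    return min(candidates, key=lambda t: t.what_if_deploy(app_cpu)).name
```

For example, after feeding two 40-core samples into a 64-core `NodeTwin("hpc", 64.0)` its forecast is 30 cores, so a 2-core application would raise its predicted utilisation to 0.5; marking that node as failed makes `suggest_node` fall back to the next node that stays under the limit.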

DECICE Manager

The DECICE Manager integrates the Digital Twin and AI models with the orchestration system (e.g., Kubernetes) through the orchestrator's standard API. This integration layer can be adapted to other orchestrators, which makes the DECICE framework portable and allows it to be integrated with commercial or open-source cloud solutions. Furthermore, the DECICE Manager includes a data repository for storing infrastructure and application metadata as well as monitoring data. This data is used to update the Digital Twin and to train the AI models. Moreover, the Manager provides a synthetic training and test environment that simulates different infrastructure and application deployment scenarios for training the AI models.
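
The portability argument rests on the Manager programming against an orchestrator-neutral interface, with one adapter per orchestrator. Below is a minimal Python sketch using hypothetical names (`OrchestratorAdapter`, `KubernetesAdapter`, `SimulatedAdapter`, `PlacementManager`). The Kubernetes adapter only builds a pod-spec fragment that pins a workload with a `nodeSelector` on the standard `kubernetes.io/hostname` label rather than contacting a cluster, and the simulated adapter plays the role of the synthetic test environment.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class OrchestratorAdapter(ABC):
    """Hypothetical orchestrator-neutral interface used by the Manager."""

    @abstractmethod
    def place(self, app: str, node: str) -> dict:
        """Translate a placement decision into an orchestrator-specific action."""


class KubernetesAdapter(OrchestratorAdapter):
    def place(self, app: str, node: str) -> dict:
        # Pod-spec fragment pinning the workload via the well-known hostname label.
        # A real adapter would submit this through the Kubernetes API server.
        return {
            "metadata": {"name": app},
            "spec": {"nodeSelector": {"kubernetes.io/hostname": node}},
        }


class SimulatedAdapter(OrchestratorAdapter):
    """Synthetic test environment: records placements instead of touching a cluster."""

    def __init__(self) -> None:
        self.placements: dict[str, str] = {}

    def place(self, app: str, node: str) -> dict:
        self.placements[app] = node
        return {"simulated": True, "app": app, "node": node}


class PlacementManager:
    """Hypothetical slice of the Manager: applies suggestions and records them."""

    def __init__(self, adapter: OrchestratorAdapter) -> None:
        self.adapter = adapter
        self.repository: list[dict] = []  # stand-in for the metadata repository

    def apply_suggestion(self, app: str, node: str) -> dict:
        result = self.adapter.place(app, node)
        self.repository.append({"app": app, "node": node, "result": result})
        return result
```

Swapping `KubernetesAdapter()` for `SimulatedAdapter()` when constructing `PlacementManager` changes where decisions land without touching the Manager logic, which is the property that lets the same framework target several orchestrators or a simulated training environment.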

The Manager exposes all of its functionality to the framework through the following APIs: the Job/End-User, Control, Security, and Telemetry APIs. Primarily, these APIs act as a translation layer between the generic API presented to users and the implementation-specific APIs. Building generic interfaces that can safely abstract and interface with multiple underlying implementations is a challenge: doing so requires creating an ontology that represents all underlying implementations and their capabilities, together with a common grammar. Further, this ontology must be extensible, as existing underlying implementations may change and new ones may be created.
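
To illustrate the translation-layer idea, the following Python sketch keeps a miniature ontology that maps generic resource terms to implementation-specific keys and can be extended at run time. The terms, the `register`/`translate` functions, and the choice of Kubernetes resource names and Slurm `sbatch` options as the two backends are assumptions made for this example; a real ontology would also have to describe capabilities and a common grammar, not just resource names.

```python
from __future__ import annotations

from typing import Callable, Dict, Tuple

# A translator maps a generic quantity to an implementation-specific (key, value) pair.
Translator = Callable[[float], Tuple[str, str]]

# Hypothetical miniature ontology: generic term -> backend -> translator.
ONTOLOGY: Dict[str, Dict[str, Translator]] = {
    "cpu": {
        "kubernetes": lambda v: ("cpu", f"{v:g}"),
        "slurm": lambda v: ("--cpus-per-task", str(int(v))),
    },
    "memory_gib": {
        "kubernetes": lambda v: ("memory", f"{v:g}Gi"),
        "slurm": lambda v: ("--mem", f"{v:g}G"),
    },
    "gpu": {
        "kubernetes": lambda v: ("nvidia.com/gpu", str(int(v))),
        "slurm": lambda v: ("--gres", f"gpu:{int(v)}"),
    },
}


def register(term: str, backend: str, translator: Translator) -> None:
    """Extend the ontology when a backend changes or a new one appears."""
    ONTOLOGY.setdefault(term, {})[backend] = translator


def translate(request: Dict[str, float], backend: str) -> Dict[str, str]:
    """Translate a generic resource request into one backend's vocabulary."""
    out: Dict[str, str] = {}
    for term, value in request.items():
        translator = ONTOLOGY.get(term, {}).get(backend)
        if translator is None:
            # Fail loudly rather than silently dropping a requirement.
            raise ValueError(f"backend {backend!r} has no mapping for {term!r}")
        key, val = translator(value)
        out[key] = val
    return out
```

The same generic request `{"cpu": 2.0, "memory_gib": 4.0, "gpu": 1.0}` becomes Kubernetes resource quantities or Slurm options depending on the backend, and raising an error for unmapped terms keeps the abstraction safe when a backend lacks a capability.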

Compute Plane

The compute plane exists to run the user workloads. It is built on existing open-source technology, which is then integrated with the DECICE components in the control plane. The primary component in the compute plane is Kubernetes, mentioned above, which provides the basic building blocks for running containerized jobs across multiple physical systems. Kubernetes is further extended with KubeEdge to allow the use and management of autonomous edge devices. Additionally, Prometheus is used to gather metrics from devices via the Kubernetes/KubeEdge interfaces. Finally, SEDNA provides AI model training capabilities, while Volcano provides the ability to run HPC jobs.
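
As an illustration of how telemetry can flow from the compute plane, the sketch below builds a query against Prometheus' HTTP API (the `/api/v1/query` instant-query endpoint) and parses the instant-vector result into per-node values that a digital twin could consume. The server address, the PromQL expression (which assumes node_exporter metrics are being scraped), and the function names are assumptions; the response structure follows the documented Prometheus format.

```python
from __future__ import annotations

import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Hypothetical Prometheus address; replace with the deployment's real endpoint.
PROMETHEUS_URL = "http://prometheus.example:9090"

# Per-node CPU utilisation, assuming node_exporter metrics are being scraped.
CPU_QUERY = '1 - avg by (instance) (rate(node_cpu_seconds_total{mode="idle"}[5m]))'


def instant_query_url(base: str, promql: str) -> str:
    """Build a URL for Prometheus' instant-query endpoint (GET /api/v1/query)."""
    return f"{base}/api/v1/query?{urlencode({'query': promql})}"


def parse_vector(body: str) -> dict[str, float]:
    """Turn an instant-vector response into {instance: value}."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error', 'unknown error')}")
    data = payload["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected a vector result, got {data['resultType']!r}")
    # Each sample's value is a [unix_timestamp, "string_value"] pair.
    return {s["metric"].get("instance", "unknown"): float(s["value"][1])
            for s in data["result"]}


def fetch_cpu_utilisation(base: str = PROMETHEUS_URL) -> dict[str, float]:
    """Query a live Prometheus server (network access required)."""
    with urlopen(instant_query_url(base, CPU_QUERY), timeout=10) as resp:
        return parse_vector(resp.read().decode("utf-8"))
```

Separating URL construction and parsing from the network call keeps the parsing logic testable against recorded responses, which also suits a synthetic test environment.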

Author: Mirac Aydin

Links

https://www.uni-goettingen.de/

Keywords

GWDG, HPC, DECICE, KUBERNETES, CLOUD, AI, DIGITAL TWIN