Enhancing Rancher Kubernetes Clusters with KubeEdge: Early Experience and Prometheus Integration

In this article, we share our early experience with extending an existing Rancher-managed Kubernetes cluster with KubeEdge and with provisioning Prometheus node exporters on the KubeEdge node.

The DECICE framework[1] relies on monitoring and telemetry information about the infrastructure to represent the state of a dynamic digital twin of the entire continuum, from public and private clouds and HPC to edge and IoT devices. This digital twin representation drives the scheduling decisions of the framework, such as dynamic load balancing and data placement. It is therefore vital to accurately collect monitoring metrics from the various devices in the continuum, specifically in Kubernetes-orchestrated environments.

Rancher[2] is an open-source container management platform that simplifies the deployment and management of Kubernetes clusters across different environments. It provides a user-friendly interface, centralized management, and additional features like monitoring, authentication, and multi-cluster management. At HLRS, we use Rancher to provide Kubernetes clusters on-demand for various projects. Rancher is configured[3] to use our private OpenStack cloud for resource provisioning, such as virtual machines (VMs) for Kubernetes nodes and volumes for persistent storage.

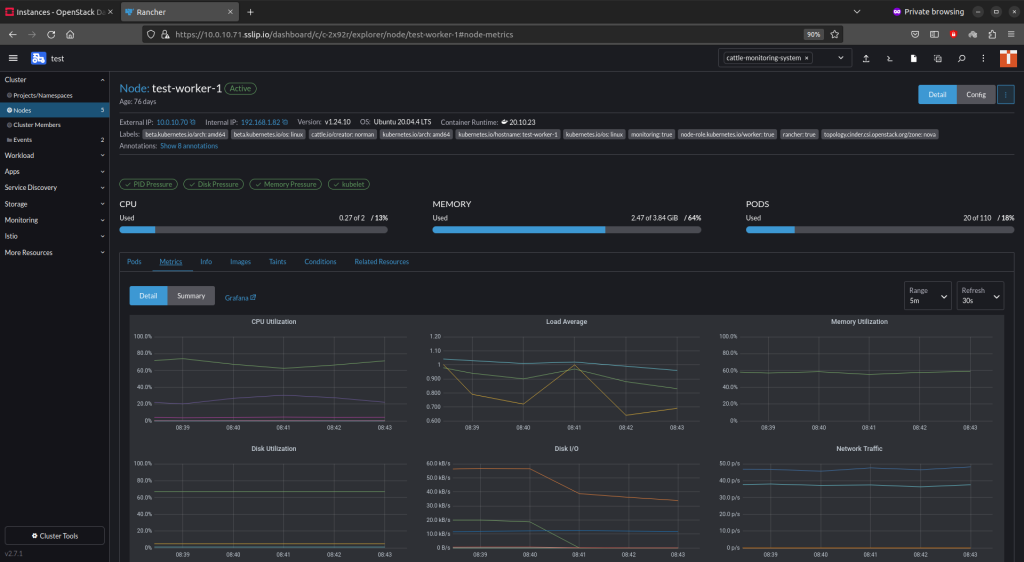

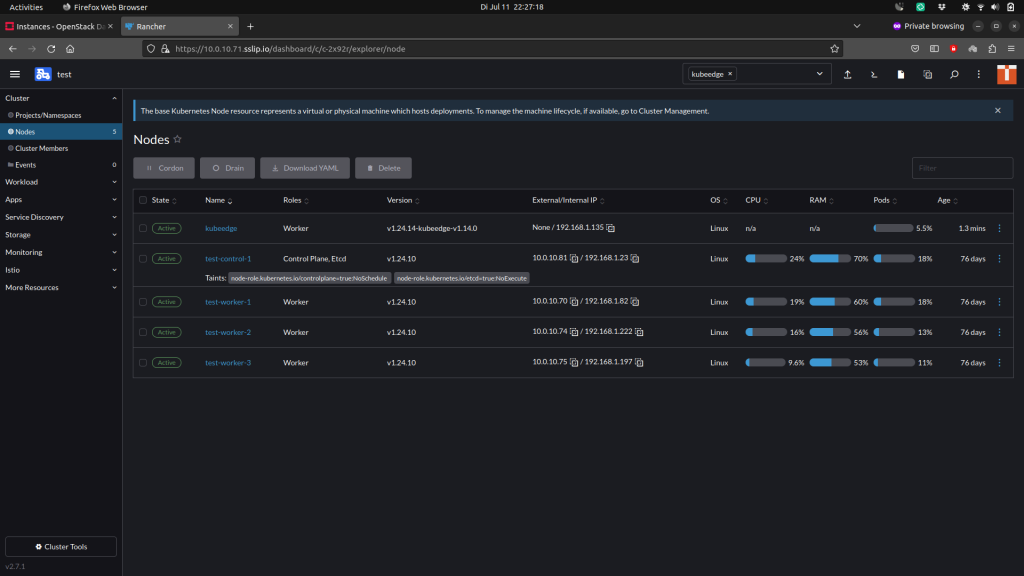

For our test setup, we provisioned a Kubernetes cluster (v1.24) with one control-plane node and three worker nodes. We installed Prometheus on these nodes using the Rancher monitoring Helm charts[4], after which the Rancher UI displays the monitoring information (see Figure 1). Additionally, we provisioned a VM for the KubeEdge (v1.14) node that will be added to the cluster.

Note that Rancher creates and manages its own pool of Kubernetes nodes. Since we add the KubeEdge node manually, this node lies outside Rancher's node pool. However, Rancher will still try to schedule the default Kubernetes system pods on the KubeEdge node. It is therefore advisable to prevent this, e.g., by explicitly labeling the Rancher nodes and adding a matching node selector to the system daemon sets[5], so that their pods are scheduled only on the Rancher nodes.
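The labeling approach relies on a simple rule: a pod with a `nodeSelector` can only be placed on nodes whose labels contain every key/value pair of the selector. The minimal sketch below illustrates that rule and builds a merge patch that adds such a selector to a daemon set's pod template. The label key `decice.example/rancher-node` is purely hypothetical, and the `node-role.kubernetes.io/edge` label on the edge node is an assumption about how KubeEdge marks its nodes; this is not the exact procedure we used.

```python
import json

# Hypothetical label for marking Rancher-managed nodes; pick your own key/value.
RANCHER_NODE_LABEL = {"decice.example/rancher-node": "true"}


def node_selector_patch(selector: dict[str, str]) -> dict:
    """Build a JSON merge patch that adds a nodeSelector to a DaemonSet's pod template."""
    return {"spec": {"template": {"spec": {"nodeSelector": dict(selector)}}}}


def matches(node_labels: dict[str, str], selector: dict[str, str]) -> bool:
    """A nodeSelector matches a node only if every key/value pair is present on it."""
    return all(node_labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    # Illustrative node labels: a labeled Rancher worker and an unlabeled edge node
    # (the edge role label is assumed, not taken from our setup).
    nodes = {
        "worker-1": {"decice.example/rancher-node": "true"},
        "kubeedge": {"node-role.kubernetes.io/edge": ""},
    }
    eligible = [name for name, labels in nodes.items() if matches(labels, RANCHER_NODE_LABEL)]
    print(eligible)  # ['worker-1']
    print(json.dumps(node_selector_patch(RANCHER_NODE_LABEL)))
```

In practice, the label could be applied with `kubectl label node <node> decice.example/rancher-node=true`, and the printed patch passed to `kubectl patch daemonset <name> -n <namespace> --type merge -p '<patch>'`. Because a merge patch merges maps, any keys already present in the daemon set's `nodeSelector` are kept.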

KubeEdge consists of Cloud Core and Edge Core[6]. In our setup, Cloud Core runs in the existing Kubernetes cluster, whereas Edge Core runs on the KubeEdge node. We followed the KubeEdge installation manual[7], additionally installing the containerd runtime and configuring the Container Network Interface (CNI) plugins[8]. After running the join command (“keadm join”) on the edge VM, the Rancher UI showed the KubeEdge node (“kubeedge”) in the list of all nodes (see Figure 2).
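Besides the Rancher UI, the join can also be checked against the node list returned by the Kubernetes API. The sketch below scans a node list (as produced by `kubectl get nodes -o json`) for nodes that carry an edge role label and report the `Ready` condition as `True`. That KubeEdge sets the `node-role.kubernetes.io/edge` label on joined nodes is an assumption here; adjust the key to whatever labels your edge nodes actually carry.

```python
EDGE_ROLE_LABEL = "node-role.kubernetes.io/edge"  # assumed label on KubeEdge nodes


def ready_edge_nodes(node_list: dict) -> list[str]:
    """Return the names of nodes that carry the edge role label and are Ready."""
    names = []
    for node in node_list.get("items", []):
        labels = node.get("metadata", {}).get("labels", {})
        if EDGE_ROLE_LABEL not in labels:
            continue
        conditions = node.get("status", {}).get("conditions", [])
        if any(c.get("type") == "Ready" and c.get("status") == "True" for c in conditions):
            names.append(node["metadata"]["name"])
    return names


if __name__ == "__main__":
    # Illustrative, trimmed-down node list; real data would come from the API.
    sample = {
        "items": [
            {
                "metadata": {"name": "kubeedge", "labels": {EDGE_ROLE_LABEL: ""}},
                "status": {"conditions": [{"type": "Ready", "status": "True"}]},
            },
            {
                "metadata": {"name": "worker-1", "labels": {}},
                "status": {"conditions": [{"type": "Ready", "status": "True"}]},
            },
        ]
    }
    print(ready_edge_nodes(sample))  # ['kubeedge']
```

To run it against a live cluster, the `sample` dictionary could be replaced by the parsed JSON output of `kubectl get nodes -o json`.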

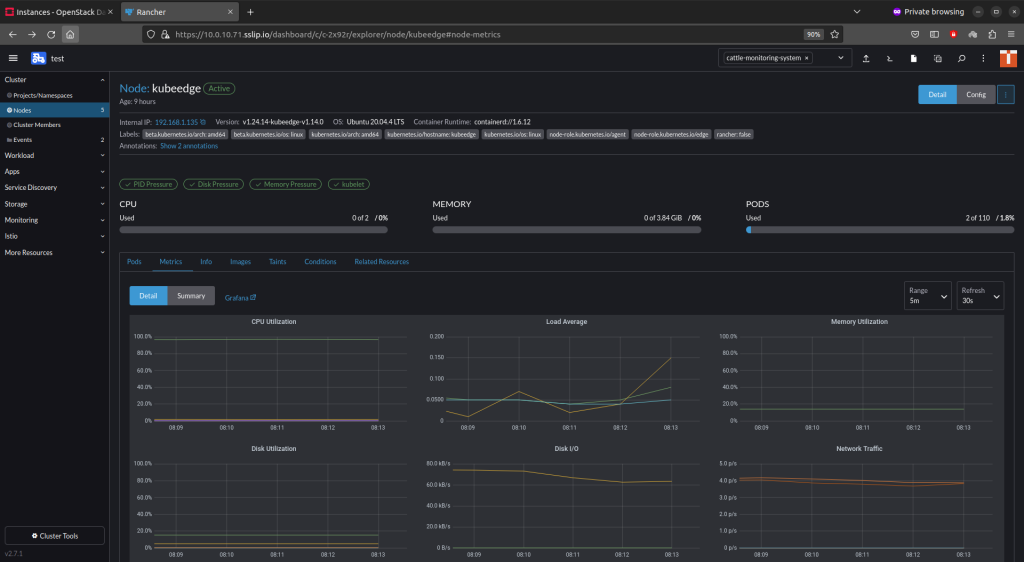

Once the KubeEdge node joins the cluster, the node exporter daemon set of the Prometheus monitoring stack automatically schedules a node exporter pod on it to collect monitoring data. This data then becomes visible in the Rancher UI, as depicted in Figure 3.
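Whether Prometheus actually scrapes the node exporter on the edge node can also be checked outside the Rancher UI through the Prometheus HTTP API. The sketch below builds an instant query against `/api/v1/query` for the `up` metric and maps each target's `instance` label to its up/down state. The Prometheus address and the `job="node-exporter"` label value are assumptions that depend on the monitoring chart's configuration.

```python
import json
import urllib.parse
import urllib.request


def query_url(prometheus_base: str, promql: str) -> str:
    """Build a URL for the Prometheus instant-query endpoint /api/v1/query."""
    return f"{prometheus_base.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def targets_up(response: dict) -> dict[str, bool]:
    """Map each target's instance label to whether its `up` sample equals 1."""
    if response.get("status") != "success":
        raise RuntimeError(f"query failed: {response.get('error')}")
    # For an instant vector, each result carries "value": [timestamp, "<sample as string>"].
    return {r["metric"].get("instance", "?"): r["value"][1] == "1" for r in response["data"]["result"]}


def fetch(url: str) -> dict:
    """Perform the HTTP GET and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Hypothetical address, e.g. reached via `kubectl port-forward` to the Prometheus service.
    url = query_url("http://localhost:9090", 'up{job="node-exporter"}')
    print(url)
    # With a reachable Prometheus: print(targets_up(fetch(url)))
```

A target reported as `False` (or missing entirely) for the edge node's address would indicate that the node exporter pod is not running or not reachable from Prometheus.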

Overall, this experiment allowed us to retrieve monitoring data from both the Kubernetes cluster's nodes and the KubeEdge node. In future work, we will look at integrating the monitoring system[9] with the DECICE Telemetry API and Monitoring Metric Storage, and at addressing the specific nature of edge and IoT nodes: optimizing data collection frequency, synchronization, and overhead, and ensuring reliability for edge devices with poor connectivity.

Author: Kamil Tokmakov (HLRS)

References

[1] Kunkel, Julian, et al. “DECICE: Device-Edge-Cloud Intelligent Collaboration Framework.” arXiv preprint arXiv:2305.02697 (2023).

[2] https://www.rancher.com/

[3] https://github.com/kubernetes/cloud-provider-openstack

[4] https://ranchermanager.docs.rancher.com/reference-guides/monitoring-v2-configuration/helm-chart-options

[5] A daemon set ensures that all (or some) nodes run a copy of a pod. As nodes are added to the cluster, pods are added to them. For more details, please visit the official documentation: https://kubernetes.io/docs/concepts/workloads/controllers/daemonset/

[6] https://github.com/kubeedge/kubeedge#architecture

[7] https://kubeedge.io/en/docs/setup/keadm/

[8] https://github.com/containerd/containerd/blob/main/docs/getting-started.md

[9] https://www.decice.eu/project-news/hlrs/

Keywords

HLRS, DECICE, Kubernetes, KubeEdge, Rancher, Monitoring