From Edge to Cloud to HPC: Cluster Automation and Integration of Advanced Scheduling Tools in Kubernetes

AI-driven scheduling from the DECICE EU project aims to improve Kubernetes performance, scalability, and resource utilization, especially in High-Performance Computing (HPC) environments. We automate cluster deployment with Ansible and Kubespray, and integrate Interlink and Volcano to improve resource allocation and job scheduling. KubeEdge connects edge devices to Kubernetes, extending the cluster to the edge. We are also exploring Sedna and Kubeflow to support AI model training and machine learning workflows. Together, these pieces aim to deliver efficient, scalable, and intelligent orchestration for modern workloads.

The DECICE EU project aims to revolutionize scheduling in Kubernetes clusters by introducing an advanced AI-driven scheduler. This initiative seeks to enhance the performance, scalability, and resource utilization within cloud-native environments, focusing on dynamically optimizing workloads based on their behavior and resource needs.

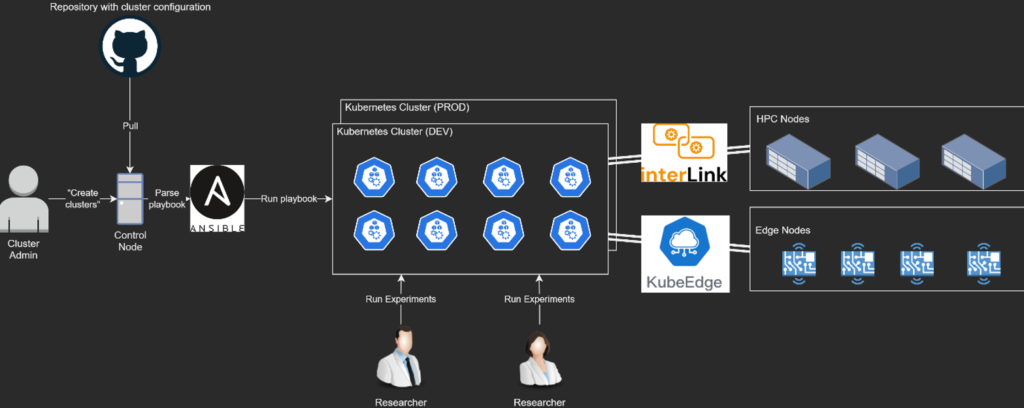

Large-scale infrastructures depend on automation: clusters must be deployed and dismantled quickly, and the complete software stack must be set up reliably and reproducibly with minimal human intervention. To meet this need, our work automates cluster deployment on High-Performance Computing (HPC) resources using Ansible and Kubespray, which provide the foundational infrastructure for scaling Kubernetes deployments. This automation frees us to focus on strategic improvements, such as integrating the Kubernetes scheduler with advanced tools like Interlink [3] and Volcano [4]. It also fits the research-driven nature of the DECICE project, where consistent experiments often require starting from a clean slate to obtain reliable results.
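To make the deploy-and-dismantle cycle concrete, here is a minimal sketch of how an inventory and the matching playbook calls could be generated. It assumes the standard Kubespray inventory groups (`kube_control_plane`, `etcd`, `kube_node`, `k8s_cluster`) and the `cluster.yml` and `reset.yml` playbooks. The host names, IP addresses, inventory path, and the choice to run etcd on the control-plane node are illustrative assumptions, not the project's actual setup.

```python
import json


def build_inventory(control_plane, workers):
    """Return a Kubespray-style Ansible inventory as a plain dict.

    Both arguments map a host name to its IP address (hypothetical values).
    """
    all_hosts = {**control_plane, **workers}
    return {
        "all": {
            "hosts": {name: {"ansible_host": ip} for name, ip in all_hosts.items()},
            "children": {
                "kube_control_plane": {"hosts": {n: {} for n in control_plane}},
                # Assumption: etcd is co-located with the control plane.
                "etcd": {"hosts": {n: {} for n in control_plane}},
                "kube_node": {"hosts": {n: {} for n in workers}},
                "k8s_cluster": {
                    "children": {"kube_control_plane": {}, "kube_node": {}}
                },
            },
        }
    }


def playbook_command(inventory_path, playbook):
    """Build the ansible-playbook argument list for a Kubespray playbook."""
    return [
        "ansible-playbook",
        "-i", inventory_path,
        "--become", "--become-user=root",
        playbook,
    ]


inventory = build_inventory(
    control_plane={"cp1": "10.0.0.10"},
    workers={"worker1": "10.0.0.11", "worker2": "10.0.0.12"},
)
inventory_path = "inventory/mycluster/hosts.json"  # hypothetical path

# Ansible's YAML inventory plugin also reads JSON, so the dict can be dumped as-is.
print(json.dumps(inventory, indent=2))

deploy = playbook_command(inventory_path, "cluster.yml")   # create the cluster
teardown = playbook_command(inventory_path, "reset.yml")   # return to a clean slate
print(" ".join(deploy))
print(" ".join(teardown))
```

The sketch only prints the commands. In an automated pipeline they could be passed to `subprocess.run`, so that each experiment begins with a `reset.yml` run followed by a fresh `cluster.yml` deployment.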

Another tool integrated into our cluster is Interlink, which enables connectivity between Kubernetes and non-Kubernetes nodes, such as an HPC cluster, other batch systems, or virtual machines. This opens the doors to workload distribution and resource optimization across diverse environments, allowing the scheduler to efficiently allocate jobs to the most suitable nodes, whether they are within Kubernetes or external systems. This capability is particularly valuable in heterogeneous environments, ensuring optimal performance and resource utilization.
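From the user's side, sending a workload to an external system can look like pinning an ordinary pod to the node that represents that system. The sketch below builds such a manifest using only standard Kubernetes fields (`nodeSelector` and `tolerations`). The node name `hpc-virtual-node`, the taint key `example.org/offload`, and the container image are hypothetical placeholders. The real values depend on how the virtual node is registered in a given deployment.

```python
import json


def offloaded_pod(name, image, command, virtual_node, taint_key):
    """Build a Pod manifest that is pinned to a specific (virtual) node.

    virtual_node and taint_key are deployment-specific; the values used
    below are placeholders, not names defined by any particular tool.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Select the node that stands in for the external system.
            "nodeSelector": {"kubernetes.io/hostname": virtual_node},
            # Tolerate the taint that keeps regular pods off that node.
            "tolerations": [{"key": taint_key, "operator": "Exists"}],
            "restartPolicy": "Never",
            "containers": [
                {"name": name, "image": image, "command": command}
            ],
        },
    }


pod = offloaded_pod(
    name="hello-hpc",
    image="busybox:1.36",
    command=["sh", "-c", "echo running on the external system"],
    virtual_node="hpc-virtual-node",   # hypothetical node name
    taint_key="example.org/offload",   # hypothetical taint key
)

# kubectl accepts JSON manifests, e.g. `kubectl apply -f pod.json`.
print(json.dumps(pod, indent=2))
```

A scheduler that decides placement automatically would set the target node itself. The explicit `nodeSelector` here only shows the mechanism by which a pod ends up on a non-Kubernetes backend.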

For scheduling, we also use Volcano, a Kubernetes-native batch job scheduler that provides advanced capabilities such as job queue management, resource-aware scheduling, and priority-based scheduling. Together, these integrations extend the default Kubernetes scheduler, making it better suited to the diverse, resource-intensive workloads common in AI and HPC applications.
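As an illustration of queue-based and priority-based batch scheduling, the following sketch generates a Volcano `Job` manifest. It assumes Volcano's `batch.volcano.sh/v1alpha1` API with the `schedulerName`, `queue`, `minAvailable`, `priorityClassName`, and `tasks` fields. The job name, image, resource requests, and the priority class `high-priority` are hypothetical, and the priority class would have to exist in the cluster.

```python
import json


def volcano_job(name, image, command, replicas, queue="default", priority_class=None):
    """Build a Volcano Job manifest with a single group of identical workers."""
    spec = {
        "schedulerName": "volcano",  # hand the pods to Volcano, not the default scheduler
        "queue": queue,              # queue the job is submitted to
        "minAvailable": replicas,    # gang scheduling: start only if all workers fit
        "tasks": [
            {
                "name": "worker",
                "replicas": replicas,
                "template": {
                    "spec": {
                        "restartPolicy": "Never",
                        "containers": [
                            {
                                "name": "worker",
                                "image": image,
                                "command": command,
                                # Illustrative requests used for resource-aware placement.
                                "resources": {
                                    "requests": {"cpu": "1", "memory": "1Gi"}
                                },
                            }
                        ],
                    }
                },
            }
        ],
    }
    if priority_class is not None:
        spec["priorityClassName"] = priority_class
    return {
        "apiVersion": "batch.volcano.sh/v1alpha1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": spec,
    }


job = volcano_job(
    name="training-batch",             # hypothetical job name
    image="python:3.12-slim",
    command=["python", "-c", "print('training step')"],
    replicas=4,
    priority_class="high-priority",    # hypothetical PriorityClass
)
print(json.dumps(job, indent=2))
```

Setting `minAvailable` equal to the number of replicas means the job is placed only when all four workers can run together, which avoids partially started jobs holding resources.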

No account of resource heterogeneity would be complete without edge and IoT devices. Our work also explores the use of KubeEdge [1] to connect edge devices to our hybrid Kubernetes/HPC cluster. This is essential for applications that demand low-latency processing, real-time decision-making, and co-location of data and processing. KubeEdge is an open-source framework that facilitates communication between edge devices and the centralized Kubernetes environment, enabling us to extend our infrastructure to the network's edge while maintaining control and scalability at the core.

Looking ahead, we are evaluating the integration of two open-source tools, Sedna [2] and Kubeflow [5], to further support the machine learning lifecycle within our automated Kubernetes deployments. Sedna, with its focus on federated learning and distributed AI, could play a pivotal role in enabling cross-cluster AI model training. Kubeflow provides an end-to-end platform for machine learning workflows, so that our infrastructure supports AI research and application development from model training through to deployment.